AI surveillance systems now observe continuously, retain data longer, and influence decisions in ways that are rarely visible to the people being monitored. What began as a security upgrade has turned into a governance challenge, one where unclear data boundaries, retention policies, and accountability gaps quietly increase risk.

In 2026, privacy determines whether AI surveillance strengthens security or creates compliance exposure. This article breaks down what responsible surveillance actually requires and how privacy-first design changes how these systems operate in the real world.

- How AI surveillance privacy risks are evolving in 2026

- What privacy-by-design looks like inside modern surveillance platforms

- How Coram approaches AI surveillance with governance built in

How AI Surveillance Has Evolved Since 2020

Since 2020, AI surveillance has shifted from simple monitoring to continuous interpretation. Cameras no longer just record footage for later review; they classify behavior, trigger alerts, and influence decisions in real time.

Advances in edge computing and hybrid architectures pushed intelligence closer to the camera, while cloud platforms expanded scale, retention, and cross-system access.

This evolution increased speed and coverage, but it also multiplied privacy exposure. More data gets collected by default. More teams gain access. More decisions happen automatically, often without clear limits on use, retention, or oversight. As surveillance became smarter, privacy risks moved from policy documents into everyday operations.

Where privacy concerns are most visible:

Schools: AI surveillance in schools often aims to improve safety, but it introduces sensitive trade-offs. Systems may monitor student movement, behavior, or attendance patterns, creating long-lived records tied to minors. Without strict data boundaries, surveillance can extend beyond safety into constant observation, raising concerns about consent, proportionality, and the long-term use of data.

Workplaces: In workplaces, AI surveillance frequently blurs the line between security and performance monitoring. Cameras paired with analytics can track presence, movement, and behavioral patterns, sometimes without clear disclosure. The privacy risk intensifies when surveillance data gets reused for productivity assessment, disciplinary decisions, or compliance reviews without transparent governance.

Public Spaces: Public surveillance has expanded rapidly since 2020, particularly with the adoption of smart cameras, facial recognition, and crowd analytics. While these systems support safety and operational efficiency, they also amplify concerns around mass data collection, anonymity erosion, and secondary use. In public environments, the lack of individual consent makes clear purpose limitation and data minimization critical.

Common Privacy Risks in Traditional AI Surveillance Systems

As AI capabilities expanded, many surveillance systems scaled intelligence faster than governance. The result is a set of recurring privacy risks that show up across industries, often hidden behind default settings and vendor limitations.

Always-On Facial Recognition

Facial recognition is frequently enabled by default, even when it is not necessary. Continuous identification turns surveillance into identity tracking, even when safety objectives don’t require it.

Without strict controls, biometric data gets collected, processed, and retained far longer than intended, raising serious consent, compliance, and misuse concerns.

Centralized Video Data Hoarding

Traditional systems tend to centralize video data in large, long-retention repositories. Footage collected for one purpose often remains accessible for many others.

This “store everything, decide later” approach increases breach exposure, expands internal access risk, and makes data minimization nearly impossible in practice.

Black-Box AI Models

Many AI surveillance platforms operate as opaque systems. Teams can’t clearly explain how detections are made, why alerts trigger, or what data influenced decisions.

When models lack transparency, accountability breaks down, making audits, investigations, and regulatory responses harder than they should be.

Vendor Lock-In and Data Control Issues

Proprietary cameras, closed AI models, and restrictive data architectures limit how organizations control their own surveillance data.

Switching vendors, adjusting retention policies, or enforcing privacy rules becomes operationally expensive or technically blocked, leaving privacy decisions tied to vendor roadmaps rather than organizational policy.

What Privacy-Conscious Buyers Expect from AI Surveillance in 2026

By 2026, privacy-conscious buyers evaluate surveillance systems the same way they evaluate security controls: by what they enforce, not what they claim. Expectations have shifted from policy alignment to operational proof.

1. Purpose-Bound Surveillance

Surveillance must start with intent instead of just capability. Buyers expect systems to be configurable around specific use cases rather than broad, always-on monitoring.

They look for:

- Clear definitions of what is monitored

- Explicit limits on where AI is applied

- Controls that prevent surveillance from expanding beyond its original purpose

2. Enforced Data Minimization

The fastest way surveillance systems create privacy risk is by retaining data longer than their original purpose requires. Buyers now evaluate systems based on how precisely they can control collection, storage, and deletion, not how much footage they can retain.

What buyers expect:

- Retention rules that vary by location, use case, or event type

- Selective recording instead of default continuous capture

- Automated deletion that enforces policy without manual intervention
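
As a rough illustration of the expectations above, the sketch below shows retention rules keyed by location and event type, with a purge step that applies them automatically. The `Clip` type, the rule table, and every duration are hypothetical values chosen for the example; they do not describe any specific product's configuration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Clip:
    clip_id: str
    location: str      # e.g. "classroom", "lobby"
    event_type: str    # e.g. "motion", "incident"
    recorded_at: datetime


# Hypothetical retention rules: (location, event_type) -> how long footage is kept.
RETENTION = {
    ("classroom", "motion"): timedelta(days=7),
    ("classroom", "incident"): timedelta(days=30),
    ("lobby", "motion"): timedelta(days=14),
}
# Assumed fallback: footage with no matching rule gets the shortest window.
DEFAULT_RETENTION = timedelta(days=3)


def expired(clip: Clip, now: datetime) -> bool:
    """True when the clip is older than the window for its location and event type."""
    window = RETENTION.get((clip.location, clip.event_type), DEFAULT_RETENTION)
    return now - clip.recorded_at > window


def purge(clips: list[Clip], now: datetime) -> tuple[list[Clip], list[str]]:
    """Split clips into those still retained and the IDs that policy says to delete."""
    kept = [c for c in clips if not expired(c, now)]
    deleted = [c.clip_id for c in clips if expired(c, now)]
    return kept, deleted
```

Running `purge` on a schedule is what turns a written retention policy into enforced deletion: nobody has to remember to remove old footage, and the rule table doubles as documentation of what is kept and for how long.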

3. Explainable AI Outputs

If an AI system can’t explain why it flagged an event, it can’t be trusted in regulated environments. Buyers increasingly reject black-box detections that can’t be reviewed, justified, or challenged.

Expected capabilities include:

- Clear reasoning behind AI-triggered alerts

- Visibility into which inputs influenced decisions

- Review workflows that support audits and investigations

4. Distributed Processing Where It Reduces Exposure

Centralizing all video data increases privacy risk by design, especially when sensitive environments are involved. Buyers are favoring architectures that limit data movement unless it’s operationally necessary.

They look for:

- Edge or hybrid processing for real-time analysis

- Localized data handling for high-sensitivity areas

- Explicit control over when and how data leaves its source
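
One way to picture explicit control over data leaving its source is a per-zone ceiling on what an edge device may send upstream. This is only a sketch under assumed names: the zones, the three egress levels, and the `allowed_egress` helper are all hypothetical.

```python
from enum import Enum


class Egress(Enum):
    NONE = "none"          # nothing leaves the device
    METADATA = "metadata"  # event label and timestamp only, no pixels
    CLIP = "clip"          # short video clip around the event


# Hypothetical per-zone ceilings on what may leave the edge device.
ZONE_CEILING = {
    "restroom_corridor": Egress.NONE,
    "classroom": Egress.METADATA,
    "loading_dock": Egress.CLIP,
}

# Ordering from least to most data leaving the source.
_ORDER = [Egress.NONE, Egress.METADATA, Egress.CLIP]


def allowed_egress(zone: str, requested: Egress) -> Egress:
    """Return the lesser of what was requested and what the zone permits.

    Unknown zones fall back to NONE, so data stays local unless a rule says otherwise.
    """
    ceiling = ZONE_CEILING.get(zone, Egress.NONE)
    return min(requested, ceiling, key=_ORDER.index)
```

The design choice worth noting is the default: a zone without a rule sends nothing, so adding a camera never silently widens what reaches central storage.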

5. Real Data Ownership and Vendor Flexibility

Privacy controls lose meaning when vendors dictate how data can be accessed, moved, or deleted. Buyers now treat data ownership and architectural openness as core privacy requirements.

Key expectations:

- Full access to and portability of surveillance data

- Policy enforcement independent of proprietary hardware

- Freedom to change vendors without breaking privacy workflows

6. Observable Privacy in Daily Operations

Privacy is not assessed at deployment; it’s evaluated through daily system behavior. Buyers expect surveillance platforms to make privacy controls visible and verifiable in real time.

They want visibility into:

- Who accessed the data and for what reason

- When AI features were enabled, modified, or disabled

- How privacy policies are enforced across teams and locations
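
A minimal sketch of what that visibility could rest on is an append-only access log that refuses entries without a stated reason. The `AuditLog` class and its field names are assumptions made for illustration, not a description of a real platform's audit API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditLog:
    """In-memory log for illustration; a real system would persist entries."""
    entries: list[dict] = field(default_factory=list)

    def record(self, actor: str, action: str, target: str, reason: str) -> dict:
        """Append one access event; a blank reason is rejected, not logged."""
        if not reason.strip():
            raise ValueError("a reason is required for every access")
        entry = {
            "at": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,   # e.g. "view_clip", "enable_feature"
            "target": target,   # e.g. a camera, clip, or feature name
            "reason": reason,
        }
        self.entries.append(entry)
        return entry

    def by_actor(self, actor: str) -> list[dict]:
        """Everything a given person did, in the order it happened."""
        return [e for e in self.entries if e["actor"] == actor]
```

Because the same `record` call can cover both footage access and feature changes, one log answers who viewed what, why, and when an AI capability was switched on or off.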

Coram’s Privacy-First Approach to AI Surveillance

Coram designs AI surveillance with privacy as an operational boundary, not a compliance afterthought. The goal isn’t to collect more data but to collect only what’s necessary and make its use predictable, controlled, and auditable.

Privacy Built Into the System Architecture

Privacy decisions aren’t left to downstream configuration. They’re enforced by how the system is designed to function.

That shows up in:

- Guardrails that prevent AI capabilities from expanding silently

- System defaults that favor limited scope over blanket coverage

- Architecture that reduces unnecessary data movement and exposure

AI Capabilities Are Explicit Choices

Advanced analytics are powerful, but power without intent creates risk. Coram requires customers to deliberately enable AI features instead of activating them by default.

This ensures:

- AI use maps to a defined purpose

- Teams understand what the system analyzes and why

- New capabilities don’t appear without operational review

Facial Recognition Used Only With Intent

Facial recognition is not treated as a baseline surveillance tool.

Instead:

- It remains disabled unless explicitly configured

- Its use is scoped to investigations rather than continuous monitoring

- Biometric data collection is avoided unless clearly justified

This design keeps identity tracking from becoming ambient surveillance.

Data Ownership Stays With the Customer

Control over surveillance data doesn’t end at access permissions. Coram keeps ownership and policy control with the organization that collects the footage.

Practically, this means:

- Customers define storage duration, access, and deletion

- Data isn’t locked behind proprietary restrictions

- Privacy policies remain enforceable even as environments scale

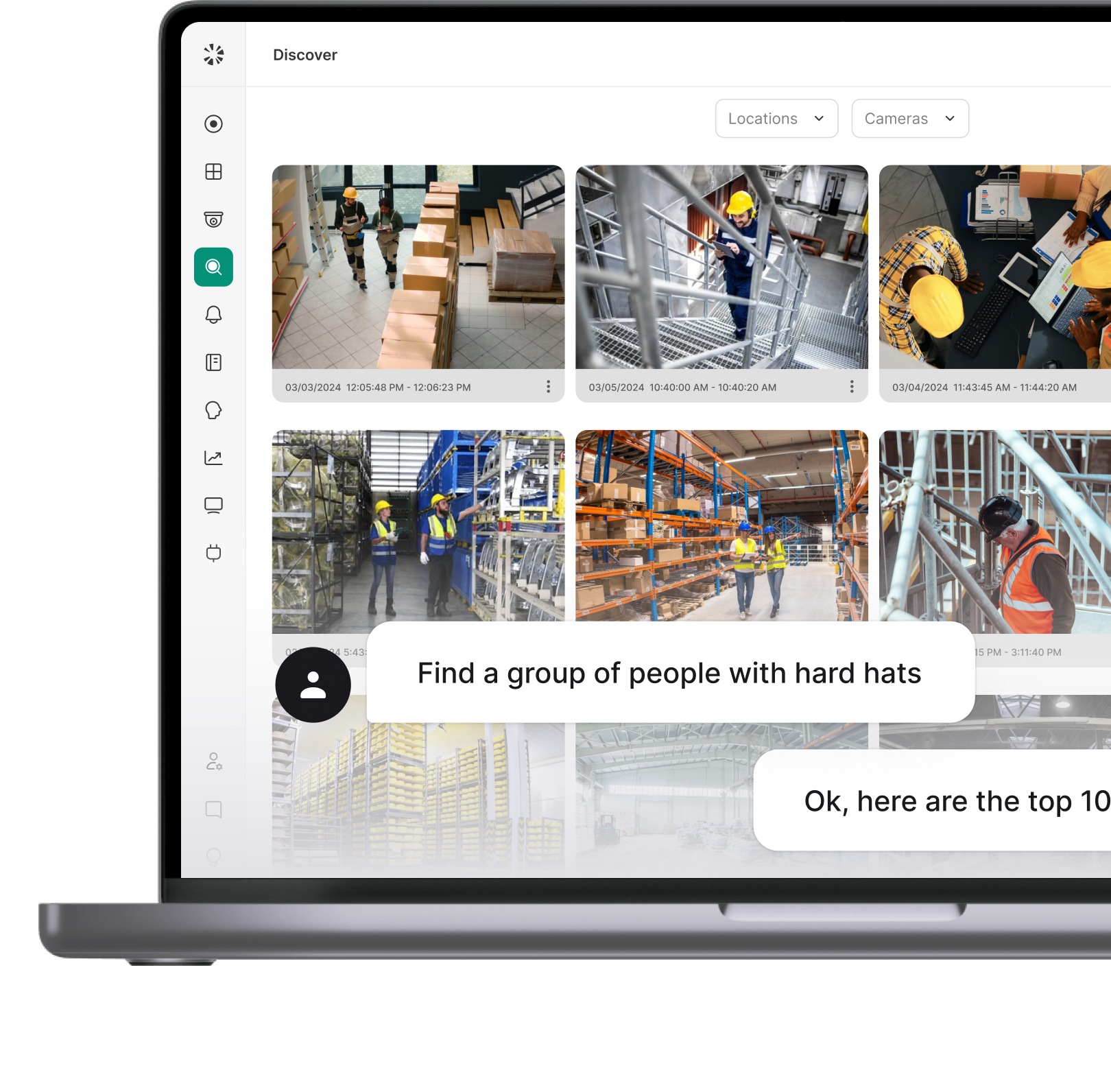

AI That Surfaces Events, Not Profiles

Coram’s AI prioritizes relevance without overreach. Search and analytics are designed to surface events and objects, not to build behavioral profiles.

Key characteristics:

- Natural language search without exposing personal identities

- Event-based detection rather than person-centric tracking

- Reduced noise without expanding surveillance scope

How Coram Handles Key Privacy Concerns Differently

Traditional AI surveillance systems optimize for coverage and capability first. Privacy controls are often layered on later, creating gaps between what the system can do and what it should do. Coram takes a different path by designing privacy into how surveillance is deployed, operated, and audited.

Here’s how Coram addresses the most common privacy risks in AI surveillance.

1. Privacy Controls Are Enforced at the System Level

Before organizations can govern surveillance responsibly, they need clear boundaries around what data is collected, processed, and retained. Coram enforces privacy at the architectural level, ensuring surveillance capabilities do not expand automatically as AI models evolve.

Privacy constraints are embedded directly into how video data flows through the system from capture to analysis to storage. This prevents silent capability creep and keeps surveillance aligned with its original intent.

Why does this matter? Privacy risk in surveillance rarely comes from misuse; it comes from over-collection and unclear boundaries. System-level enforcement ensures privacy remains consistent even as environments scale.

2. AI Analytics Are Explicitly Opt-In

Once surveillance infrastructure is in place, controlling how AI is applied becomes critical. Coram requires customers to explicitly enable analytics instead of activating them by default.

This control ensures analytics are tied to intent, not platform capability.
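
To make the opt-in idea concrete, here is a small sketch in which every analytics feature starts disabled and can only be enabled together with a stated purpose. The feature names and the `AnalyticsConfig` class are hypothetical and are not taken from Coram's product.

```python
from dataclasses import dataclass, field

# Hypothetical catalogue of analytics features a platform might offer.
KNOWN_FEATURES = {"people_counting", "license_plate_search", "facial_recognition"}


@dataclass
class AnalyticsConfig:
    # feature name -> the purpose given when it was enabled; empty means all off
    enabled: dict[str, str] = field(default_factory=dict)

    def enable(self, feature: str, purpose: str) -> None:
        """Turn a feature on only when it exists and a purpose is supplied."""
        if feature not in KNOWN_FEATURES:
            raise KeyError(f"unknown feature: {feature}")
        if not purpose.strip():
            raise ValueError("enabling a feature requires a stated purpose")
        self.enabled[feature] = purpose

    def is_enabled(self, feature: str) -> bool:
        """Features are off unless someone explicitly enabled them."""
        return feature in self.enabled
```

Storing the purpose next to the switch means a later review can check each enabled feature against the reason it was turned on.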

3. Facial Recognition Is Scoped for Investigation

Facial recognition introduces biometric risk and heightened regulatory exposure. Coram treats it as a targeted investigative capability rather than a baseline surveillance function.

Here’s how teams manage its use:

- Facial recognition remains disabled unless explicitly configured

- Use is limited to specific investigations

- Continuous identity tracking is prevented by default

If facial recognition is not required, it never becomes part of routine monitoring.
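
Scoping facial recognition to an investigation can be pictured as a check that a face search is tied to an open case, a named set of cameras, and a bounded time window. The sketch below assumes a hypothetical `Investigation` record and `may_run_face_search` helper; it illustrates the principle, not an actual implementation.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Investigation:
    case_id: str
    cameras: frozenset[str]   # only these cameras are in scope
    starts: datetime          # earliest footage that may be searched
    ends: datetime            # latest footage that may be searched


def may_run_face_search(
    inv: Optional[Investigation], camera: str, footage_time: datetime
) -> bool:
    """Allow a face search only inside the camera set and time window of a case.

    With no investigation at all, the answer is always False, which is the
    disabled-by-default behaviour.
    """
    if inv is None:
        return False
    return camera in inv.cameras and inv.starts <= footage_time <= inv.ends
```

Under this shape, continuous identity tracking has no code path: without a case that names cameras and a time range, the check fails.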

4. Customers Retain Full Ownership of Video Data

Controlling AI behavior is only part of privacy governance. Organizations also need authority over the data itself. Coram ensures customers retain ownership of their surveillance footage and full control over its lifecycle.

This includes:

- Defining retention periods

- Managing access across teams

- Enforcing deletion policies without vendor restrictions

The impact: By keeping data ownership with the customer, organizations can align surveillance practices with internal governance policies and evolving regulatory requirements without restructuring their surveillance stack.

5. Transparent AI Search Focused on Events

Finding relevant footage shouldn’t require exposing personal identities. Coram’s AI search prioritizes events, objects, and activity patterns rather than person-centric profiling.

Natural language search helps teams locate incidents quickly while keeping identity exposure minimal. Analysis remains focused on what happened, not who was observed.

This approach supports investigations without expanding surveillance beyond what’s operationally necessary.

AI Surveillance Privacy for Schools, Enterprises, and Cities

AI surveillance privacy doesn’t fail for the same reasons everywhere. The risk depends on who is being monitored, how much choice they have, and what happens to the data afterward. Schools, enterprises, and cities face fundamentally different privacy pressures, and treating them the same is where systems break down.

Where Privacy Pressure Shows Up First

Schools: When Data Outlives the Student

In schools, the core privacy risk is duration. Surveillance data tied to students can persist long after incidents are resolved. Without strict limits, systems quietly accumulate behavioral histories that were never meant to follow individuals beyond school grounds.

Privacy-aware school surveillance narrows scope aggressively: short retention, incident-driven access, and analytics that activate only when safety thresholds are crossed.

Enterprises: When Surveillance Changes Power Dynamics

In enterprises, privacy friction appears when surveillance data intersects with authority. Cameras meant to protect facilities start influencing attendance, conduct, or productivity narratives.

The privacy challenge here is secondary use. Systems must prevent security data from being repurposed for employment decisions unless explicitly governed. Separation of access, audit trails, and purpose enforcement matters more than detection accuracy.

Cities: When Visibility Scales Faster Than Trust

Cities face the hardest problem: surveillance without consent at massive scale. Even well-intentioned systems trigger backlash when people don’t understand what’s being collected or why. Here, privacy hinges on proportionality. Limiting identification, minimizing retention, and communicating boundaries clearly determine whether surveillance is perceived as public safety or public monitoring.

The common thread: Across schools, enterprises, and cities, privacy failures don’t come from bad intent. They come from systems that don’t adapt their boundaries to context.

Privacy-aware AI surveillance isn’t about turning features on or off. It’s about designing systems that understand where they operate and enforce limits accordingly.

Privacy-First Surveillance, Done Right

You’ve seen how AI surveillance evolved, where privacy breaks, and what buyers expect in 2026. The win is clear: effective surveillance and privacy can coexist with the right design.

- Privacy risks rise when AI analyzes continuously without purpose limits, retention controls, or explainability.

- Schools, enterprises, and cities face different pressures, requiring context-aware guardrails rather than one-size policies.

- Buyers now expect opt-in AI, minimal data movement, customer-owned footage, and event-focused intelligence.

If you’re ready to apply these principles in practice, Coram delivers privacy-first AI surveillance built for real operations without forcing analytics, identities, or data-control tradeoffs.

FAQ

How is AI surveillance different from traditional video surveillance?

Traditional video surveillance records footage for later review. AI surveillance actively analyzes video in real time, detecting events, patterns, and anomalies automatically. This shift from passive recording to continuous interpretation increases capability, but also expands how much data is processed, how long it’s retained, and how decisions are made.

Why does AI surveillance raise privacy concerns?

AI systems don’t just store video; they extract meaning from it. That includes behavioral signals, movement patterns, and sometimes identity-related data. Without strict controls, this can lead to over-collection, long retention, secondary use, and decisions that are hard to explain or audit.

What regulations shape AI surveillance in 2026?

In 2026, AI surveillance is shaped by a mix of data protection, biometric, and AI-specific regulations. These commonly address consent, purpose limitation, retention, transparency, and accountability, especially where facial recognition, public monitoring, or employee surveillance is involved. Enforcement is increasingly focused on how systems operate, not just what policies say.

How does Coram approach privacy in AI surveillance?

Coram designs privacy into system behavior. AI features are opt-in, facial recognition isn’t mandatory, customers retain ownership of their video data, and analytics focus on events rather than profiling individuals. This keeps surveillance purpose-bound, explainable, and easier to govern over time.
